In a dramatic turn of events, Congress is quietly advancing a 10-year federal safe harbor for Big Tech that would block any state and local regulation of artificial intelligence (AI). That safe harbor would give Big Tech another free ride on the backs of artists, authors, consumers, all of us, and our children. It would stop cold the enforcement of state consumer protection laws, like those behind the $1.375 billion settlement Google reached with the State of Texas last week for grotesque violations of user privacy. The bill would go up on Big Tech’s trophy wall right next to the DMCA, Section 230, and Title I of the Music Modernization Act.

Introduced through the House Energy and Commerce Committee as part of a broader legislative package branded with President Trump’s economic agenda, this safe harbor would prevent states from enforcing or enacting any laws that address the development, deployment, or oversight of AI systems. While couched as a measure to ensure national uniformity and spur innovation, this proposal carries serious consequences for consumer protection, data privacy, and state sovereignty. It threatens to erase hard-fought state-level protections that shield Americans from exploitative child snooping, data scraping, biometric surveillance, and the unauthorized use of their personal data and creative works of all kinds. This post unpacks how we got here, why it matters, and what can still be done to stop it.

The Origins of the New Safe Harbor

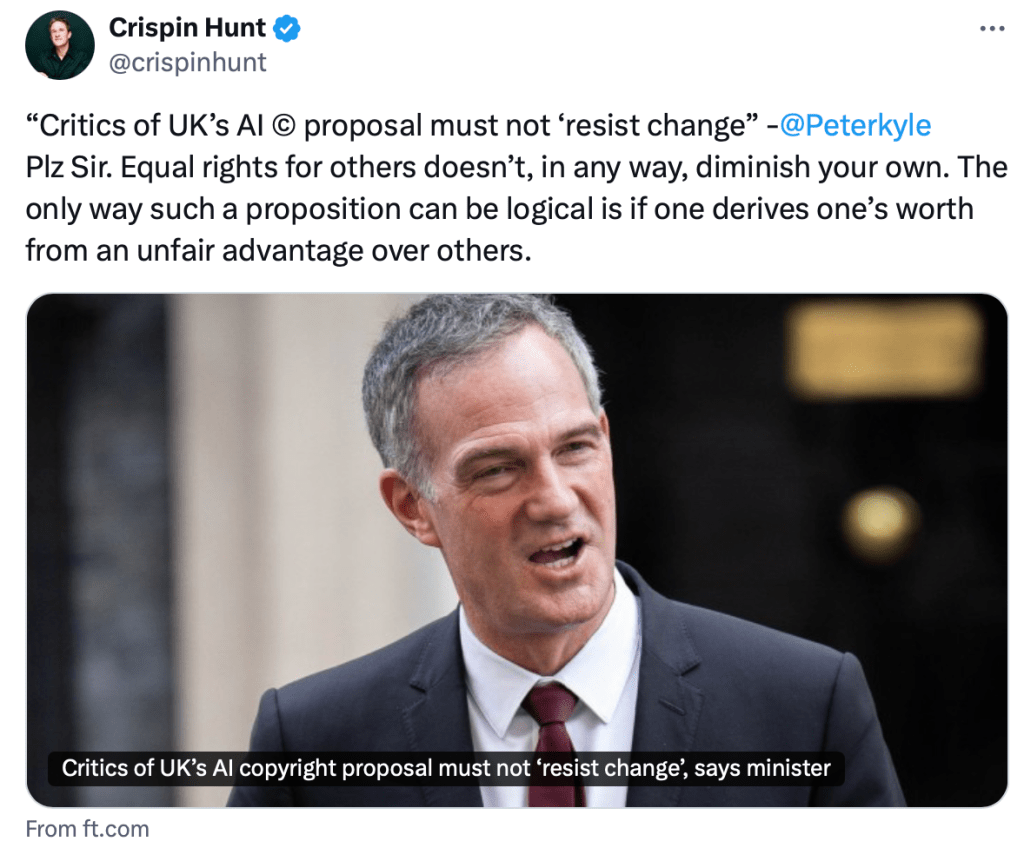

The roots of the latest AI safe harbor lie in a growing push from Silicon Valley-aligned political operatives and venture capital influencers, many of whom fear a patchwork of state-level consumer protection laws that would stop AI data scraping. Among the most vocal proponents is tech entrepreneur turned White House crypto czar David Sacks, who has advocated for federal preemption of state AI rules in order to protect startup innovation from what he and others call regulatory overreach, otherwise known as the states’ “police powers” to protect their residents.

If my name were “Sacks” I’d probably be a bit careful about doing things that could get me fired. His influence reportedly played a role in shaping the safe harbor’s timing and language, leveraging connections on Capitol Hill to attach it to a larger pro-business package of legislation. That package, marketed as a pillar of President Trump’s economic plan, was seen as a convenient vehicle to slip through controversial provisions with minimal scrutiny. You know, let’s sneak one past the boss.

Why This Is Dangerous for Consumers and Creators

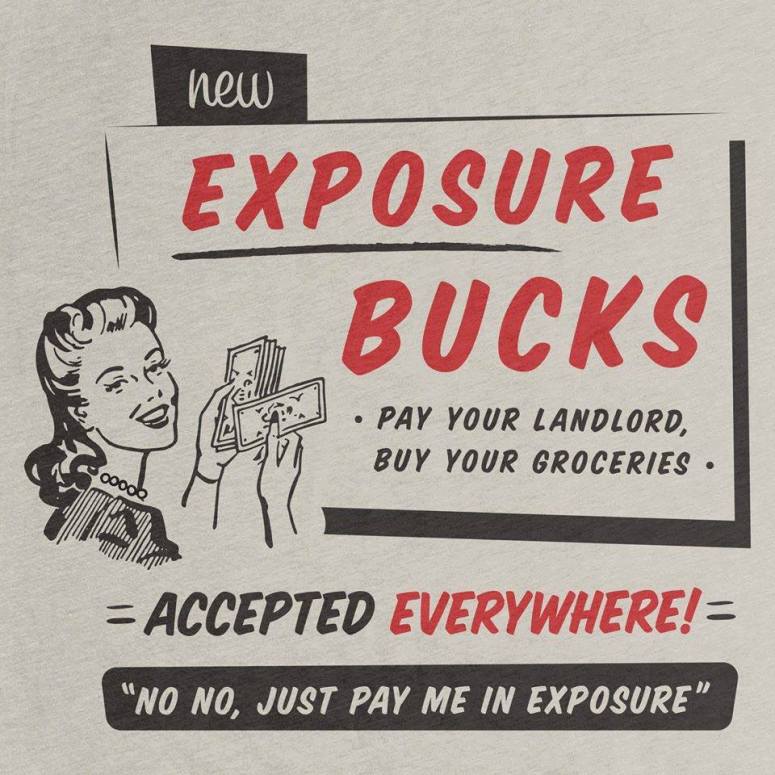

The most immediate danger of the AI safe harbor is its preemption of state protections at a time when AI technologies are accelerating unchecked. States like California, Illinois, and Virginia have enacted, or are considering, laws to limit how companies use AI to analyze facial features, scan emails, extract audio, or mine creative works from social media. The AI mantra is that they can snarf down “publicly available data,” which essentially means everything that’s not behind a paywall. Because there is no federal AI regulation yet, state laws are crucial for protecting vulnerable populations, including children whose photos and personal information are shared by parents online. Under the proposed AI safe harbor, such protections would be nullified for 10 years, and don’t think it won’t be renewed.

Without the ability to regulate AI at the state level, we could see our biometric data harvested without consent. Social media posts—including photos of babies, families, and school events—could be scraped and used to train commercial AI systems without transparency or recourse. Creators across all copyright categories could find their works ingested into large language models and generative tools without license or attribution. Emails and other personal communications could be fed into AI systems for profiling, advertising, or predictive decision-making without oversight.

While federal regulation of AI is certainly coming, this AI safe harbor includes no immediate substitute. Instead, it freezes state-level regulatory development entirely for a decade (an eternity in the technology world), during which time the richest companies in the history of commerce can entrench themselves further with little fear of accountability. And it will likely provide a blueprint for federal legislation when it comes.

A Strategic Misstep for Trump’s Economic Agenda: Populism or Make America Screwed Again?

Ironically, attaching the moratorium to a legislative package meant to symbolize national renewal may ultimately undermine the very populist and sovereignty-based themes that President Trump has championed. By insulating Silicon Valley firms from state scrutiny, the legislation effectively prioritizes the interests of data-rich corporations over the privacy and rights of ordinary Americans. It hands a victory to unelected tech executives and undercuts the authority of governors, state legislators, and attorneys general who have stepped in where federal law has lagged behind. So much for all that “states are laboratories of democracy” jazz.

Moreover, the manner in which the safe harbor was advanced legislatively, slipped into what is supposed to be a reconciliation bill without extensive hearings or stakeholder input, is classic pork and classic Beltway maneuvering in smoke-filled rooms. Critics from across the political spectrum have noted that such tactics cheapen the integrity of any legislation they touch and reflect the worst of Washington horse-trading.

What Can Be Done to Stop It

The AI safe harbor is not a done deal. There are several procedural and political tools available to block or remove it from the broader legislative package.

1. Committee Intervention – Lawmakers on the House Energy and Commerce Committee or the Rules Committee can offer amendments to strip or revise the moratorium before it proceeds to the full House.

2. House Floor Action – Opponents of the moratorium can offer floor amendments during debate to strike the provision. This requires coordination and support from members across both parties.

3. Senate “Byrd Rule” Challenge and Holds – Because reconciliation bills must be budget-related, the safe harbor is vulnerable if the Senate Parliamentarian deems it “extraneous” to the budget, which it certainly seems to be. Senators can formally raise this challenge as a point of order.

4. Conference Committee Negotiation – If different versions of the legislation pass the House and Senate, the final language will be hashed out in conference. There is still time to remove the moratorium here.

5. Public Advocacy – Artists, parents, consumer advocates, and especially state officials can apply pressure through media, petitions, and direct outreach to lawmakers, highlighting the harms and democratic risks of federal preemption. States may be able to sue to block the safe harbor as unconstitutional (see Chris’s discussion of constitutionality), but let’s not wait to get to that point. It must be said that any such litigation poses a threat to Trump’s “Big Beautiful Bill,” courtesy of David Sacks.

Conclusion

The AI safe harbor may have been introduced quietly, but its consequences would be anything but subtle, and a backlash is growing from all corners. If enacted, it would freeze innovation in AI accountability, strip states of their ability to protect residents, and expose Americans to widespread digital exploitation. While marketed as pro-innovation, the safe harbor looks more like a gift to data-hungry monopolies at the expense of federalist principles and individual rights.

It’s not too late to act, but doing so requires vigilance, transparency, and an insistence that even the most powerful Big Tech oligarchs remain subject to democratic oversight.
