Congress is considering several legislative packages to regulate AI. AI is a system that was launched globally with no safety standards, no threat modeling, and no real oversight. A system that externalized risk onto the public, created enormous security vulnerabilities, and then acted surprised when criminals, hostile states, and bad actors exploited it.

After the damage was done, the same companies that built it told governments not to regulate—because regulation would “stifle innovation.” Instead, they sold us cybersecurity products, compliance frameworks, and risk-management services to fix the problems they created.

Yes, artificial intelligence is a problem. Wait…Oh, no sorry. That’s not AI.

That was the Internet. And it made the tech bros the richest ruling class in history.

And that’s why some of us are just a little skeptical when the same tech bros are now telling us: “Trust us, this time will be different.” AI will be different, that’s for sure. They’ll get even richer and they’ll rip us off even more this time. Not to mention building small nuclear reactors on government land that we paid for, monopolizing electrical grids that we paid for, and expecting us to fill the landscape with massive power lines that we will pay for.

The topper is that these libertines want no responsibility for anything, and they want to seize control of the levers of government to stop any accountability. But there are some in Congress who are serious about not getting fooled again.

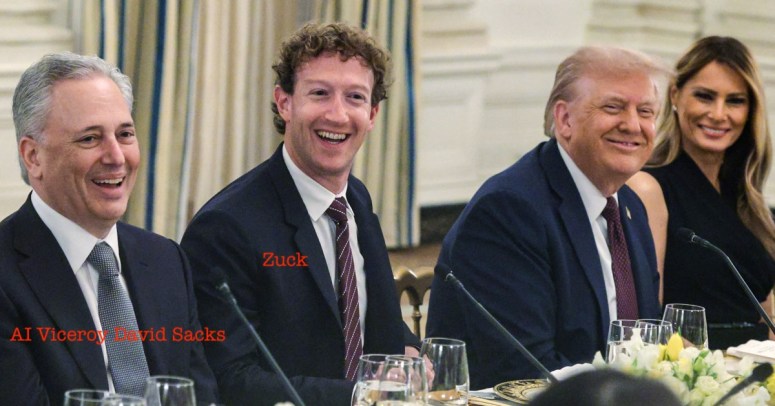

Senator Marsha Blackburn released a summary of legislation she is sponsoring that gives us some cause for hope (read it here courtesy of our friends at the Copyright Alliance). Because her bill might be effective, that means Silicon Valley shills will be all over it to try to water it down and, if at all possible, destroy it. That attack of the shills has already started with Silicon Valley’s AI Viceroy in the Trump White House, a guy you may never have heard of named David Sacks. Know that name. Beware that name.

Senator Blackburn’s bill will do a lot of good things, including protecting copyright. But the first substantive section of Senator Blackburn’s summary is a game changer. She would establish an obligation on AI platforms to be responsible for known or predictable harm that can befall users of AI products. This is sometimes called a “duty of care.”

Her summary states:

Place a duty of care on AI developers in the design, development, and operation of AI platforms to prevent and mitigate foreseeable harm to users. Additionally, this section requires:

• AI platforms to conduct regular risk assessments of how algorithmic systems, engagement mechanics, and data practices contribute to psychological, physical, financial, and exploitative harms.

• The Federal Trade Commission (FTC) to promulgate rules establishing minimum reasonable safeguards.

At its core, Senator Blackburn’s AI bill tries to force tech companies to play by rules that most other industries have followed for decades: if you design a product that predictably harms people, you have a responsibility to fix it.

That idea is called “products liability.” Simply put, it means companies can’t sell dangerous products and then shrug it off when people get hurt. Sounds logical, right? Sounds like what you would expect would happen if you did the bad thing? Car makers have to worry about the famous exploding gas tanks. Toy manufacturers have to worry about choking hazards. Drug companies have to test side effects. Tobacco companies... well, you know the rest. The law doesn’t demand perfection, but it does demand reasonable care and imposes a “duty of care” on companies that put dangerous products in front of the public.

Blackburn’s bill would apply that same logic to AI platforms. Yes, the special people would have to follow the same rules as everyone else with no safe harbors.

Instead of treating AI systems as abstract “speech” or neutral tools, the bill treats them as what they are: products with design choices. Those choices can foreseeably cause psychological harm, financial scams, physical danger, or exploitation. Recommendation algorithms, engagement mechanics, and data practices aren’t accidents. They’re engineered. At tremendous expense. One thing you can be sure of is that if Google’s algorithms behave a certain way, it’s not because the engineers ran out of development money. The same is true of ChatGPT, Grok, etc. On a certain level of reality, this is very likely not a matter of guesswork or mere predictability. It’s “known” rather than “should have known.” These people know exactly what their algorithms do. And they do it for the money.

The bill would impose that duty of care on AI developers and platform operators. A duty of care is a basic legal obligation to act reasonably to prevent foreseeable harm. “Foreseeable” doesn’t mean you can predict the exact victim or moment—it means you can anticipate the type of harm that flows to users you target from how the system is built.

To make that duty real, the bill would require companies to conduct regular risk assessments and make them public. These aren’t PR exercises. They would have to evaluate how their algorithms, engagement loops, and data use contribute to harms like addiction, manipulation, fraud, harassment, and exploitation.

They do this already, believe it. What’s different is that they don’t make it public, any more than Ford made public the internal research showing that the Pinto’s gas tank was likely to explode. In other words, platforms would have to look honestly at what their systems actually do in the world, not just what they claim to do.

The bill also directs the Federal Trade Commission (FTC) to write rules establishing minimum reasonable safeguards. That’s important because it turns a vague obligation (“be responsible”) into enforceable standards (“here’s what you must do at a minimum”). Think of it as seatbelts and crash tests for AI systems.

So why do tech companies object? Because many of them argue that their algorithms are protected by the First Amendment—that regulating how recommendations work is regulating speech. Yes, that is a load of crap. It’s not just you, it really is BS.

Imagine Ford arguing that an exploding gas tank was “expressive conduct,” that drivers chose the Pinto to make a statement, and therefore safety regulation would violate Ford’s free speech rights. No court would take that seriously. A gas tank is not an opinion. It’s an engineered component with known risks, and those risks were known to the manufacturer.

AI platforms are the same. When harm flows from design decisions (how content is ranked, how users are nudged, how systems optimize for engagement), that’s not speech. That’s product design. You can measure it, test it, and audit it, which they do, and you can make it safer, which they don’t.

This part of Senator Blackburn’s bill matters because platform design shapes culture, careers, and livelihoods. Algorithms decide what gets seen, what gets buried, and what gets exploited. Blackburn’s bill doesn’t solve every problem, but it takes an important step: it says tech companies can’t hide dangerous products behind free-speech rhetoric anymore.

If you build it, and it predictably hurts people, you’re responsible for fixing it. That’s not censorship. It’s accountability. And people like Marc Andreessen, Sam Altman, Elon Musk and David Sacks will hate it.
