When White House AI Czar David Sacks tweets confidently that “there will be no federal bailout for AI” because “five major frontier model companies” will simply replace each other, he is not speaking as a neutral observer. He is speaking as a venture capitalist with overlapping financial ties to the very AI companies now engaged in the most circular investment structure Silicon Valley has engineered since the dot-com bubble—but on a scale measured not in millions or even billions, but in trillions.

Sacks is a PayPal alumnus turned political-tech kingmaker who has positioned himself at the intersection of public policy and private AI investment. His recent stint as a Special Government Employee raised eyebrows precisely because of this dual role. Yet he now frames the AI sector as a robust ecosystem that can absorb firm-level failure without systemic consequence.

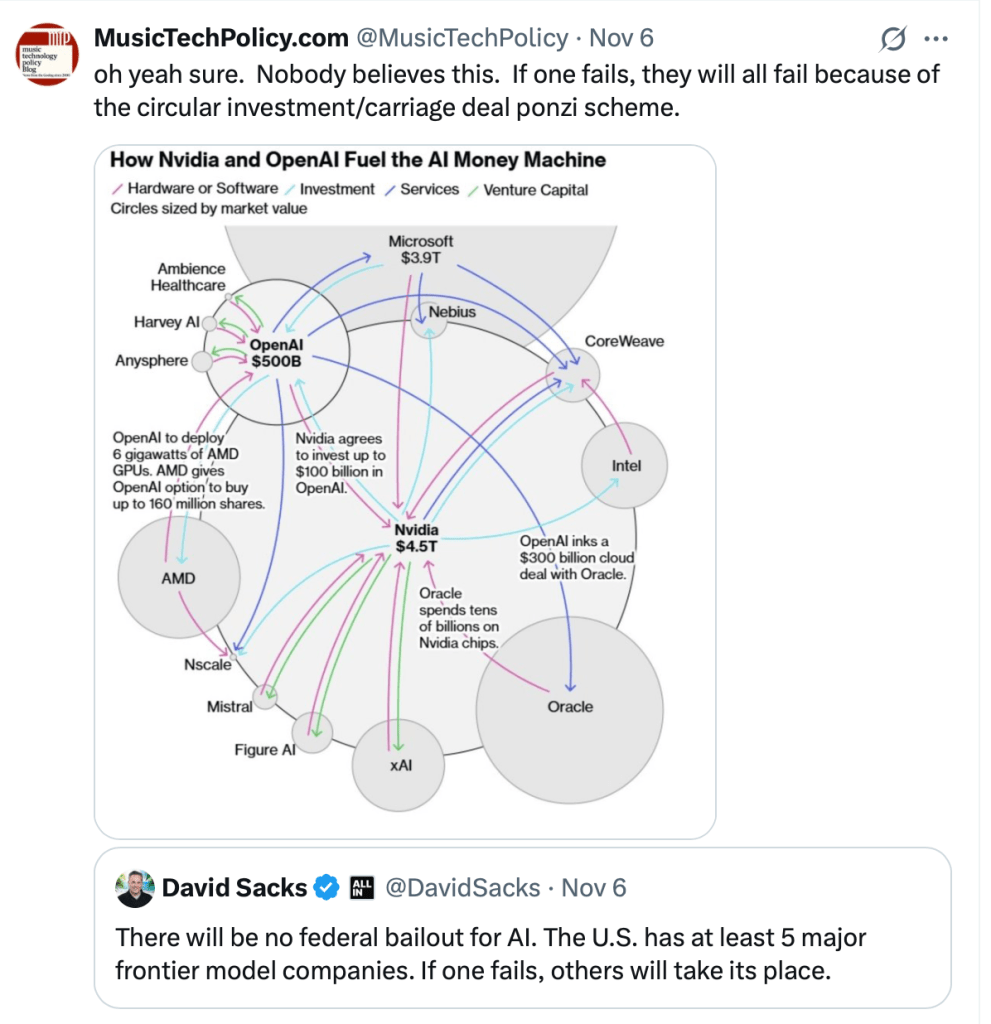

The numbers say otherwise. The diagram circulating in the X-thread exposes the real structure: mutually dependent investments tied together through cross-equity stakes, GPU pre-purchases, cloud-compute lock-ins, and stock-option-backed revenue games. Microsoft invests in OpenAI; OpenAI pays Microsoft for cloud resources; Microsoft books the revenue and inflates the value of its stake in OpenAI. Nvidia invests in OpenAI; OpenAI buys tens of billions in Nvidia chips; Nvidia’s valuation inflates; and that valuation becomes the collateral propping up the entire sector. Oracle buys Nvidia chips; OpenAI signs a $300 billion cloud deal with Oracle; Oracle books the upside. Every player’s “growth” relies on every other player’s spending.

This is not competition. It is a closed liquidity loop. And it’s a repeat of the dot-bomb “carriage” deals that contributed to the stock market crash in 2000.

And underlying all of it is the real endgame: a frantic rush to secure taxpayer-funded backstops—through federal energy deals, subsidized data-center access, CHIPS-style grants, or Department of Energy land leases—to pay for the staggering infrastructure costs required to keep this circularity spinning. The singularity may be speculative, but the push for a public subsidy to sustain it is very real.

Call it what it is: an industry searching for a government-sized safety net while insisting it doesn’t need one.

In the meantime, the circular investing game serves another purpose: it manufactures sky-high paper valuations that can be recycled into legal war chests. Those inflated asset values are now being used to bankroll litigation and lobbying campaigns aimed at rewriting copyright, fair use, and publicity law so that AI firms can keep strip-mining culture without paying for it.

The same feedback loop that props up their stock prices is funding the effort to devalue the work of every writer, musician, actor, and visual artist on the planet—and to lock that extraction in as a permanent feature of the digital economy.
