Every few months, an AI company wins a procedural round in court or secures a sympathetic sound bite about “transformative fair use.” Within hours, the headlines declare a new doctrine of spin: the right to train AI on copyrighted works. But let’s be clear — no such right exists and probably never will. That doesn’t mean they won’t keep trying.

A “right to train” is not found anywhere in the Copyright Act or any other law. It’s also not found in the fair use court cases that the AI lobby leans on. It’s a slogan and it’s spin, not a statute. What we’re watching is a coordinated effort by the major AI labs to manufacture a safe harbor through litigation, using every favorable fair-use ruling to carve out what looks like a precedent for blanket immunity. Then they’ll get one of their shills in Congress or a state legislature to introduce legislation as though a “right to train” had been there all along.

How the “Right to Train” Narrative Took Shape

The phrase first appeared in tech-industry briefs and policy papers describing model training as a kind of “machine learning fair use.” The logic goes like this: since humans can read a book and learn from it, a machine should be able to “learn” from the same book without permission.

That analogy collapses under scrutiny. First of all, humans typically buy the book they read or check it out from a library. Humans don’t make bit-for-bit copies of everything they read, and they don’t reproduce or monetize those copies at global scale. AI training does exactly that: it stores expressive works inside model weights, then redeploys them to generate derivative material.

But the repetitive chant of the term “right to train” serves a purpose: to normalize the idea that AI companies are entitled to scrape, store, and replicate human creativity without consent. Each time a court finds a narrow fair-use defense in a context that doesn’t involve piracy or derivative outputs (because they lose on training on stolen goods, as in the Anthropic and Meta cases), the labs and their shills trumpet it as proof that training itself is categorically protected. It isn’t. No court has ever ruled that it is, and none likely ever will.

Fair Use Is Not a Safe Harbor

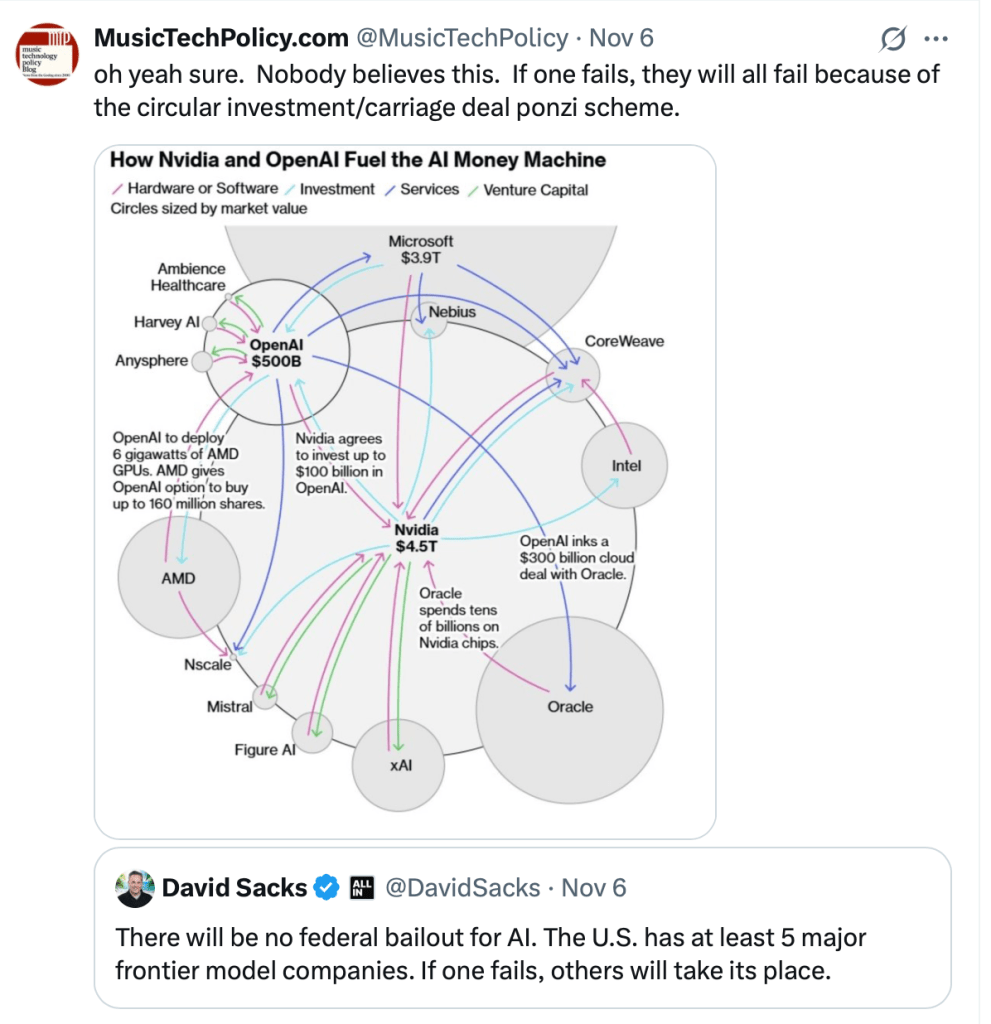

Fair use is a case-by-case defense to copyright infringement, not a standing permission slip. It weighs the purpose and character of the use (including whether it is transformative), the nature of the work, the amount used, and the market effect, all of which vary depending on the facts. But AI companies are trying to convert that flexible doctrine into a brand new safe harbor: a default assumption that all training is fair use unless proven otherwise. They love a safe harbor in Silicon Valley and routinely abuse the ones they have, like Section 230, the DMCA, and Title I of the Music Modernization Act.

That’s exactly backward. The Copyright Office’s own report makes clear that the legality of training depends on how the data was acquired and what the model does with it. A developer who trains on pirated or paywalled material, as Anthropic, Meta, and probably all of them have to one degree or another, can’t launder infringement through the word “training.”

Even if courts were to recognize limited fair use for truly lawful training, that protection would never extend to datasets built from pirate websites, torrent mirrors, or unlicensed repositories like Sci-Hub, Z-Library, or Common Crawl’s scraped paywalls (more on the scummy Common Crawl another time). The DMCA’s safe harbors don’t protect platforms that knowingly host stolen goods, and neither would any hypothetical “right to train.”

Yet a safe harbor is precisely what the labs are seeking: a doctrine that would retroactively bless mass infringement, as Spotify got in the Music Modernization Act, and preempt accountability for the sources they used.

And not only do they want a safe harbor — they want it for free. No licenses, no royalties, no dataset audits, no compensation. What do they want? FREE STUFF. When do they want it? NOW! Just blanket immunity, subsidized by every artist, author, and journalist whose work they ingested without consent or payment.

The Real Motive Behind the Push

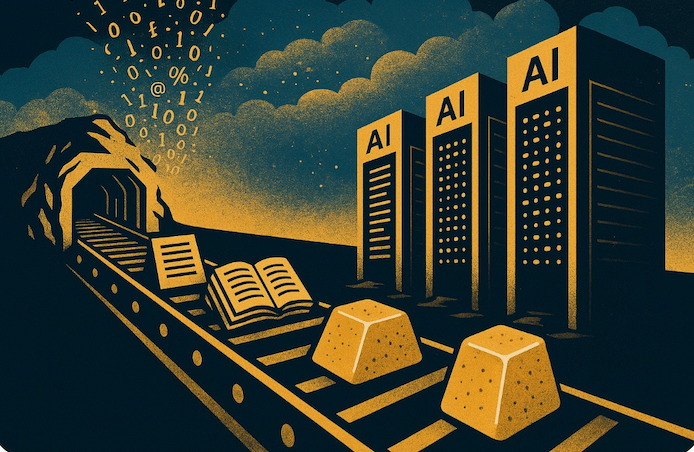

The reason AI companies need a “right to train” is simple: without it, they have no reliable legal basis for the data that powers their models, and they are too cheap to pay and too careless to take the time to license. Most of their “training corpora” were built years before any licenses were contemplated, scraped from the open web, archives, and pirate libraries under the assumption that no one would notice.

This is particularly important for books. Training on books is vital for AI models because books provide structured, high-quality language, complex reasoning, and deep cultural context. They teach models coherence, logic, and creativity that short-form internet text lacks. Without books, AI systems lose depth, nuance, and the ability to understand sustained argument, narrative, and style.

Without books, AI labs have no business. That’s why they steal books. Very simple, really.

Now that creators are suing, the labs are trying to reverse-engineer legitimacy. They want to turn each court ruling that nudges fair use in their direction into a brick in the wall of a judicially-manufactured safe harbor — one that Congress never passed and rights-holders never agreed to and would never agree to.

But safe harbors are meant to protect good-faith intermediaries who act responsibly once notified of infringement. AI labs are not intermediaries; they are direct beneficiaries. Their entire business model depends on retaining the stolen data permanently in model weights that cannot be erased. The “right to train” is not a right — it’s a rhetorical weapon to make theft sound inevitable and a demand from the richest corporations in commercial history for yet another government-sponsored subsidy of infringement by bad actors.

The Myth of the Inevitable Machine

AI’s defenders claim that training on copyrighted works is as natural as human learning. But there’s nothing natural about hoarding other people’s labor at planetary scale and calling it innovation. The truth is simpler: the “right to train” is a marketing term invented to launder unlawful data practices into respectability.

If courts and lawmakers don’t call it what it is (a manufactured safe harbor for piracy to benefit some of the biggest free riders who ever snarfed down corporate welfare), then history will repeat itself. What Grokster tried to do with distribution, AI is trying to do with cognition: privatize the world’s creative output and claim immunity for the theft.
