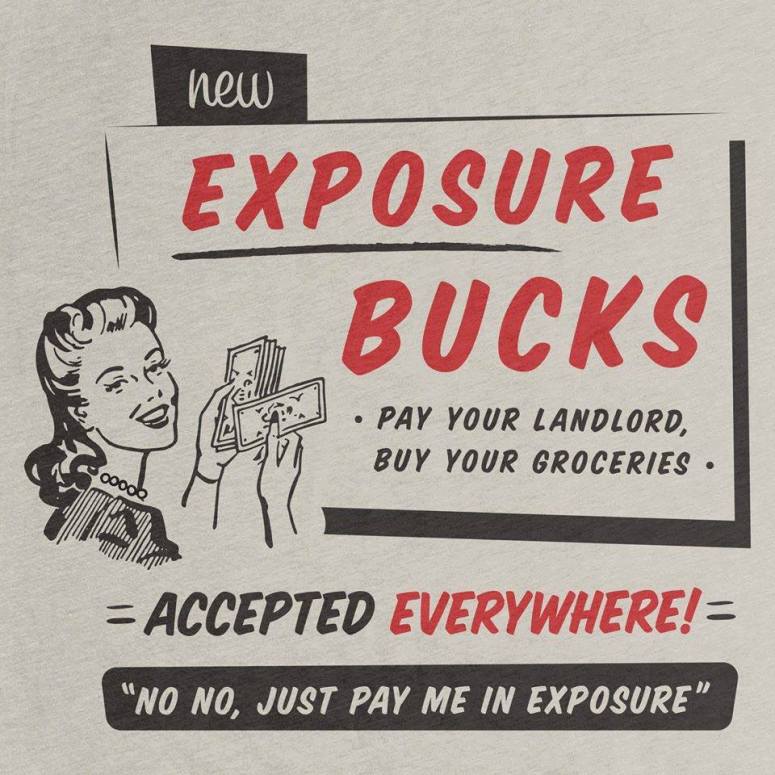

Spotify failed to consult any of the people who drive fans to the data abattoir: the musicians, artists, podcasters and authors.

Spotify has quietly tightened the screws on AI this summer—while simultaneously clarifying how it uses your data to power its own machine‑learning features. For artists, rightsholders, developers, and policy folks, the combination matters: Spotify is making it harder for outsiders to train models on Spotify data, even as it codifies its own first‑party uses like AI DJ and personalized playlists.

Spotify is drawing a bright line: no training models on Spotify; yes to Spotify training its own. If you’re an artist or developer, that means stronger contractual leverage against third‑party scrapers—but also a need to sharpen your own data‑governance and licensing posture. Expect other platforms in music and podcasting to follow suit—and for regulators to ask tougher questions about how platform ML features are audited, licensed, and accounted for.

Below is a plain‑English (hopefully) breakdown of what changed, what’s new or newly explicit, and the practical implications for different stakeholders.

Explicit ban on using Spotify to train AI models (third parties).

Spotify’s User Guidelines now flatly prohibit “crawling” or “scraping” the service and, crucially, “using any part of the Services or Content to train a machine learning or AI model.” That’s a categorical no for bots and bulk data slurps. The Developer Policy mirrors this: apps using the Web API may not “use the Spotify Platform or any Spotify Content to train a machine learning or AI model.” In short: if your product ingests Spotify data to train a model, you’re in violation of the rules and risk enforcement, including revocation of access.

Spotify’s own AI/ML uses are clearer—and broad.

The Privacy Policy (effective August 27, 2025) spells out that Spotify uses personal data to “develop and train” algorithmic and machine‑learning models to improve recommendations, build AI features (like AI DJ and AI playlists), and enforce rules. The legal basis for that processing is framed largely as Spotify’s “legitimate interests.” Translation: your usage, voice, and other data can feed Spotify’s own models.

The user content license is very broad.

If you post “User Content” (messages, playlist titles, descriptions, images, comments, etc.), you grant Spotify a worldwide, sublicensable, transferable, royalty‑free, irrevocable license to reproduce, modify, create derivative works from, distribute, perform, and display that content in any medium. That’s standard platform drafting these days, but the scope—including derivative works—has AI‑era consequences for anything you upload to or create within Spotify’s ecosystem (e.g., playlist titles, cover images, comments).

Anti‑manipulation and anti‑automation rules are baked in.

The User Guidelines and Developer Policy double down on bans against bots, artificial streaming, and traffic manipulation. If you’re building tools that touch the Spotify graph, treat “no automated collection, no metric‑gaming, no derived profiling” as table stakes—or risk enforcement, up to termination of access.

Data‑sharing signals to rightsholders continue.

Spotify says it can provide pseudonymized listening data to rightsholders under existing deals. That’s not new, but in the ML context it underscores why parallel data flows to third parties are tightly controlled: Spotify wants to be the gateway for data, not the faucet you can plumb yourself.

What this means by role:

• Artists & labels: The AI‑training ban gives you a clear contractual hook against services that scrape Spotify to build recommenders, clones, or vocal/style models. Document violations (timestamps, IPs, payloads) and send notices citing the User Guidelines and Developer Policy. Meanwhile, assume your own usage and voice interactions can be used to improve Spotify’s models—something to consider for privacy reviews and internal policies.

• Publishers and collecting societies: The combination of “no third‑party training” + “first‑party ML training” is a policy trend to watch across platforms. It raises familiar questions about derivative data, model outputs, and whether platform machine learning features create new accounting categories—or require new audit rights—in future licenses.

• Policymakers: Read this as another brick in the “closed data/open model risk” wall. Platforms restrict external extraction while expanding internal model claims. That asymmetry will shape future debates over data‑access mandates, competition remedies, and model‑audit rights—especially where platform ML features may substitute for third‑party discovery tools.
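For the artists-and-labels point above about documenting violations (timestamps, IPs, payloads), here is a minimal Python sketch of what an evidence log could look like. Everything in it is an assumption for illustration: the `ScrapeEvidence` record, the `record_evidence` and `append_to_log` helpers, and the field names are hypothetical, not anything Spotify or its policies prescribe. It stores a SHA-256 hash of the captured payload rather than the payload itself, so the log can show what was observed without redistributing the content.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ScrapeEvidence:
    """One observed incident. All field names are hypothetical."""
    observed_at: str      # ISO 8601 timestamp, UTC
    source_ip: str        # address the traffic came from
    description: str      # what was observed, in plain words
    payload_sha256: str   # hash of the captured payload, not the payload
    cited_clause: str     # which policy the notice letter would cite


def record_evidence(source_ip, description, payload, cited_clause,
                    observed_at=None):
    """Build an evidence record; defaults the timestamp to now (UTC)."""
    ts = observed_at or datetime.now(timezone.utc)
    return ScrapeEvidence(
        observed_at=ts.isoformat(),
        source_ip=source_ip,
        description=description,
        payload_sha256=hashlib.sha256(payload).hexdigest(),
        cited_clause=cited_clause,
    )


def append_to_log(entry, path):
    """Append one record as a JSON line, leaving earlier lines untouched."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(entry), sort_keys=True) + "\n")
```

A call such as `append_to_log(record_evidence("203.0.113.7", "bulk playlist metadata fetch", captured_bytes, "User Guidelines: AI training ban"), "evidence.jsonl")` would add one line per incident. An append-only JSON Lines file is just one simple choice; whether such a log carries evidentiary weight is a question for counsel, not for the script.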

Practical to‑dos

1) For rights owners: Add explicit “no platform‑sourced training” language in your vendor, distributor, or analytics contracts. Track and log known scrapers and third‑party tools that might be training off Spotify. Consider notice letters that cite the specific clauses.

2) For privacy and legal teams: Update DPIAs and data maps. Spotify’s Privacy Policy identifies “User Data,” “Usage Data,” “Voice Data,” “Message Data,” and more as inputs for ML features under legitimate interest. If you rely on Spotify data for compliance reports, make sure you’re only using permitted, properly aggregated outputs—not raw exports.

3) For users: I will be posting a guide on how to claw back your data. I may not hit everything, so I am always open to suggestions about anything else that others spot.

Spotify’s terms give it very broad rights to collect, combine, and use your data (listening history, device/ads data, voice features, third-party signals) for personalization, ads, and product R&D. They also take a broad license to user content you upload (e.g., playlist art).

Key cites

• User Guidelines: prohibition on scraping and on “using any part of the Services or Content to train a machine learning or AI model.”

• Developer Policy (effective May 15, 2025): “Do not use the Spotify Platform or any Spotify Content to train a machine learning or AI model…” Also bans analyzing Spotify content to create new/derived listenership metrics or user profiles for ad targeting.

• Privacy Policy (effective Aug. 27, 2025): Spotify uses personal data to “develop and train” ML models for recommendations, AI DJ/AI playlists, and rule‑enforcement, primarily under “legitimate interests.”

• Terms & Conditions of Use: very broad license to Spotify for any “User Content” you post, including the right to “create derivative works” and to use content by any means and media worldwide, irrevocably.

[A version of this post first appeared on MusicTechPolicy]
