There’s a new dance in Washington—it’s called the KowTow

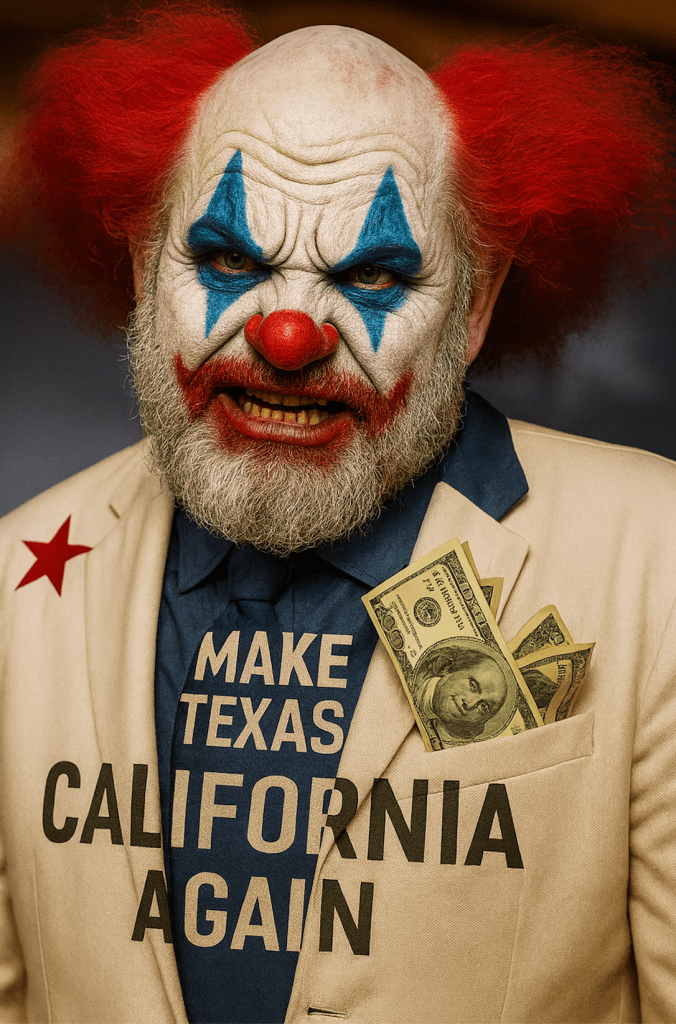

Most musicians don’t spend their days thinking about executive orders. But if you care about your rights, your recordings, your royalties, your community, or even the environment, you need to understand the Trump Administration’s new executive order on artificial intelligence. The order, presented as “Ensuring a National Policy Framework for AI,” is not a national standard at all. It is a blueprint for stripping states of their power, protecting Big Tech from accountability, and centralizing AI authority in the hands of unelected political operatives and venture capitalists. In other words, it’s business as usual for the special interests, led by an unelected bureaucrat: Silicon Valley Viceroy and billionaire investor David Sacks, whom The New York Times recently called out as a walking conflict of interest.

You’ll Hear “National AI Standard.” That’s Fake News. It’s Silicon Valley’s Wild West

Supporters of the EO claim Trump is “setting a national framework for AI.” Read it yourself. You won’t find a single policy on:

– AI systems stealing copyrights (already proven in court against Anthropic and Meta)

– AI systems inducing self-harm in children

– Whether Google can build a water‑burning data center or nuclear plant next to your neighborhood

None of that is addressed. Instead, the EO orders the federal government to sue and bully states like Florida and Texas that pass AI safety laws, and it threatens to cut off broadband funding unless states abandon their democratically enacted protections. They will call this “preemption,” which is when federal law overrides conflicting state laws: when Congress (or sometimes a federal agency) occupies a policy area, states lose the ability to enforce different or stricter rules. But there is no federal legislation here (EOs don’t count), so there can be no “preemption.”

Who Really Wrote This? The Sacks–Thierer Pipeline

This EO reads like it was drafted directly from the talking points of David Sacks and Adam Thierer, the two loudest voices insisting that states must be prohibited from regulating AI. It reads that way because it was: Trump himself gave all the credit to David Sacks at the signing ceremony.

– Adam Thierer works at Google’s R Street Institute and pushes “permissionless innovation,” meaning companies should be allowed to harm the public before regulation is allowed.

– David Sacks is a billionaire Silicon Valley investor from South Africa with hundreds of AI and crypto investments, as documented by The New York Times, who stands to profit from deregulation.

Worse, the EO lards itself with references to federal agencies coordinating with the “Special Advisor for AI and Crypto,” who is—yes—David Sacks. That means DOJ, Commerce, Homeland Security, and multiple federal bodies are effectively instructed to route their AI enforcement posture through a private‑sector financier.

The Trump AI Czar—VICEROY Without Senate Confirmation

Sacks is exactly what we have been warning about for months: the unelected Trump AI Czar.

He is not Senate‑confirmed.

He is not subject to conflict‑of‑interest vetting.

He is a billionaire “special government employee” with vast personal financial stakes in the outcome of AI deregulation.

Under the Constitution, you cannot assign significant executive authority to someone who never faced Senate scrutiny. Yet the EO repeatedly implies exactly that.

Even Trump’s MOST LOYAL MAGA Allies Know This Is Wrong

Trump signed the order in a closed ceremony with sycophants and tech investors—not musicians, not unions, not parents, not safety experts, not even one Red State governor.

Even political allies and activists like Mike Davis and Steve Bannon blasted the EO for gutting state powers and centralizing authority in Washington while failing to protect creators. When Bannon and Davis are warning you the order goes too far, that tells you everything you need to know. Well, almost everything.

And Then There’s Ted Cruz

On top of everything else, the one official in the room representing a state was U.S. Senator Ted Cruz of Texas, a state that has led on AI protections for consumers. Cruz sold out Texas musicians while gutting the Constitution, and as a former Supreme Court clerk he knew full well what he was doing.

Why It Matters for Musicians

AI isn’t some abstract “tech issue.” It’s about who controls your work, your rights, your economic future. Right now:

– AI systems train on our recordings without consent or compensation.

– Major tech companies use federal power to avoid accountability.

– The EO protects Silicon Valley elites, not artists, fans or consumers.

This EO doesn’t protect your music, your rights, or your community. It claims to override state and local protections and hands Big Tech a federal shield.

It’s Not a National Standard — It’s a Power Grab

What’s happening isn’t leadership. It’s *regulatory capture dressed as patriotism*. If musicians, unions, state legislators, and everyday Americans don’t push back, this EO will become a legal weapon used to silence state protections and entrench unaccountable AI power.

What David Sacks and his band of thieves are teaching the world is what they learned from Dot Bomb 1.0: the first time around, they didn’t steal enough. If you’re going to steal, steal all of it. Then the government will protect you.
