Silicon Valley Loses Bigly

In a symbolic vote that spoke volumes, the U.S. Senate voted 99–1 to strike the toxic AI safe harbor moratorium from the One Big Beautiful Bill Act (HR 1) during the vote-a-rama, according to the AP. Senator Ted Cruz, who had actively supported the measure, joined the bipartisan chorus in stripping it, an acknowledgment that the proposal had become politically radioactive.

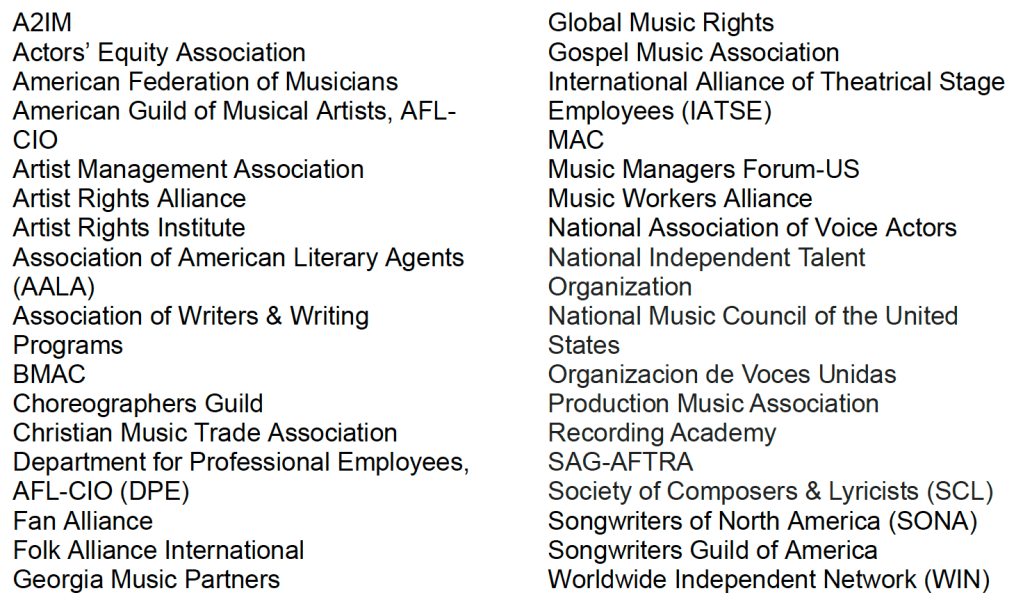

To recap, the AI moratorium would have barred states from regulating artificial intelligence for up to 10 years, tying access to broadband and infrastructure funds to compliance. It triggered an immediate backlash: Republican governors, state attorneys general, parents’ groups, civil liberties organizations, and even independent artists condemned it as a blatant handout to Big Tech in the form of yet another rent-seeking safe harbor.

Marsha Blackburn and Maria Cantwell to the Rescue

Credit where it’s due: Senator Marsha Blackburn (R–TN) was the linchpin in the Senate, working across the aisle with Sen. Maria Cantwell to introduce the amendment that finally killed the provision. Blackburn’s credibility with conservative and tech-wary voters gave other Republicans room to move, and once the tide turned, it became a rout. Her leadership was key to sending the signal to her Republican colleagues, including Senator Cruz, that this wasn’t a hill to die on.

Top Cover from President Trump?

But stripping the moratorium wasn’t just a Senate rebellion. This kind of reversal in must-pass, triple-whip legislation doesn’t happen without top cover from the White House, and in all likelihood, Donald Trump himself. The provision was never a “last stand” issue in the art of the deal. Trump can plausibly say he gave industry players like Masayoshi Son, Meta, and Google a shot, but the resistance from the states made it politically untenable. It was frankly a poorly handled provision from the start, and there’s little evidence Trump was ever personally invested in it. He certainly made no public statements about it, which is why I always felt it was such an improbable deal point that it was intended as a bargaining chip all along, whether the staff knew it or not.

One thing is for damn sure: it ain’t coming back in the House, which is another way you know you can stick a fork in it, despite the churlish shillery types who are sulking off the pitch.

One final note on the process: it’s unfortunate that the Senate Parliamentarian made such a questionable call when she let the AI moratorium survive the Byrd Bath, despite it being so obviously not germane to reconciliation. The provision never should have made it this far in the first place — but oh well. Fortunately, the Senate stepped in and did what the process should have done from the outset.

Now what?

It ain’t over til it’s over. The battle with Silicon Valley may be over on this issue today, but that’s not to say the war is over. The AI moratorium may reappear, reshaped and rebranded, in future bills. But its defeat in the Senate is important. It proves that state-level resistance can still shape federal tech policy, even when it’s buried in omnibus legislation and wrapped in national security rhetoric.

Cruz’s shift wasn’t a betrayal of party leadership — it was a recognition that even in Washington, federalism still matters. And this time, the states — and our champion Marsha — held the line.

Brava, madam. Well played.

This post first appeared on MusicTechPolicy
