For Immediate Release

HUMAN ARTISTRY CAMPAIGN ENDORSES NO FAKES ACT

Bipartisan Bill Reintroduced by Senators Blackburn, Coons, Tillis, & Klobuchar and Representatives Salazar, Dean, Moran, Balint and Colleagues

Creates New Federal Right for Use of Voice and Visual Likeness in Digital Replicas

Empowers Artists, Voice Actors, and Individual Victims to Fight Back Against AI Deepfakes and Voice Clones

WASHINGTON, DC (April 9, 2025) – Amid global debate over guardrails needed for AI, the Human Artistry Campaign today announced its support for the reintroduced “Nurture Originals, Foster Art, and Keep Entertainment Safe Act of 2025” (“NO FAKES Act”) – landmark legislation giving every person an enforceable new federal intellectual property right in their image and voice.

Building on the original NO FAKES legislation introduced last Congress, the updated bill was reintroduced today by Senators Marsha Blackburn (R-TN), Chris Coons (D-DE), Thom Tillis (R-NC), and Amy Klobuchar (D-MN), alongside Representatives María Elvira Salazar (R-FL-27), Madeleine Dean (D-PA-4), Nathaniel Moran (R-TX-1), and Becca Balint (D-VT-At Large), and bipartisan colleagues.

The legislation sets a strong federal baseline protecting all Americans from the invasive AI-generated deepfakes flooding digital platforms today. From young students bullied with non-consensual sexually explicit deepfakes, to families scammed by voice clones, to recording artists and performers replicated to sing or perform in ways they never did, the NO FAKES Act provides powerful remedies: it requires platforms to quickly take down non-consensual deepfakes and voice clones, and it allows rightsholders to seek damages from creators and distributors of AI models designed specifically to create harmful digital replicas.

The legislation’s thoughtful, measured approach preserves existing state causes of action and rights of publicity, including Tennessee’s groundbreaking ELVIS Act. It also contains carefully calibrated exceptions to protect free speech, open discourse and creative storytelling – without trampling the underlying need for real, enforceable protection against the vast range of invasive and harmful deepfakes and voice clones.

Human Artistry Campaign Senior Advisor Dr. Moiya McTier released the following statement in support of the legislation:

“The Human Artistry Campaign stands for preserving essential qualities of all individuals – beginning with a right to their own voice and image. The NO FAKES Act is an important step towards necessary protections that also support free speech and AI development. The Human Artistry Campaign commends Senators Blackburn, Coons, Tillis, and Klobuchar and Representatives Salazar, Dean, Moran, Balint, and their colleagues for shepherding bipartisan support for this landmark legislation, a necessity for every American to have a right to their own identity as highly realistic voice clones and deepfakes become more pervasive.”

Dr. Moiya McTier, Human Artistry Campaign Senior Advisor

By establishing clear rules for the new federal voice and image right, the NO FAKES Act will power innovation and responsible, pro-human uses of powerful AI technologies while providing strong protections for artists, minors and others. This important bill has cross-sector support from Human Artistry Campaign members and companies such as OpenAI, Google, Amazon, Adobe and IBM. The NO FAKES Act is a strong step forward for American leadership that erects clear guardrails for AI and real accountability for those who reject the path of responsibility and consent.

Learn more and let your representatives know Congress should pass the NO FAKES Act here.

# # #

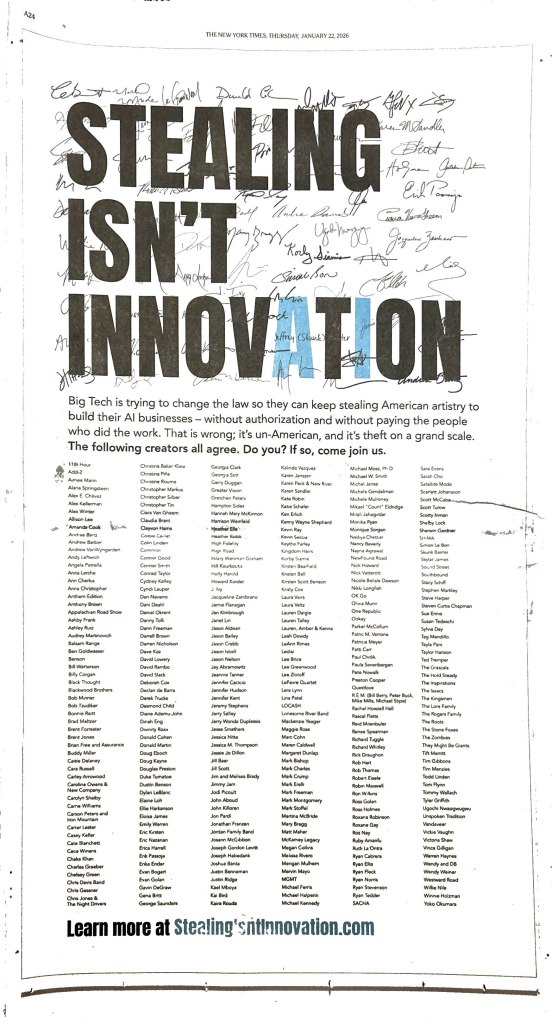

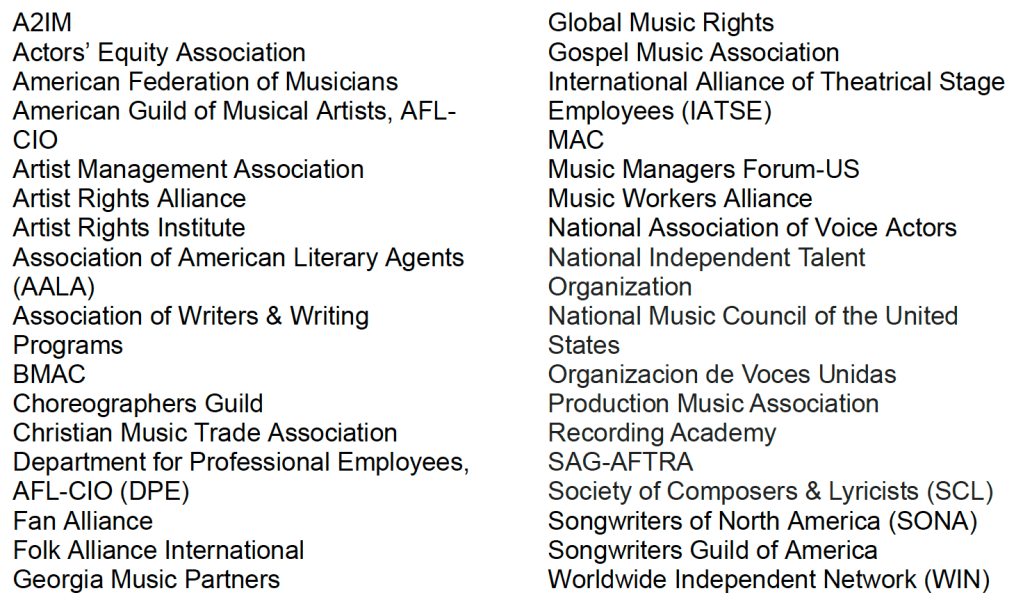

ABOUT THE HUMAN ARTISTRY CAMPAIGN: The Human Artistry Campaign is the global initiative for the advancement of responsible AI – working to ensure it develops in ways that strengthen the creative ecosystem, while also respecting and furthering the indispensable value of human artistry to culture. Across 34 countries, more than 180 organizations have united to protect every form of human expression and creative endeavor they represent – journalists, recording artists, photographers, actors, songwriters, composers, publishers, independent record labels, athletes and more. The growing coalition champions seven core principles for keeping human creativity at the center of technological innovation. For further information, please visit humanartistrycampaign.com
